Structured Content (Not AI) Will Determine the Future of What We Know

Understanding AI operations as an information ecosystem

Those who control the information architecture will control the future of our knowledge.

I have approached this idea cautiously, but I don’t think it is hyperbole.

The more I work with content and AI, the more obvious this becomes.

Here is an example that stands out.

While working with Claude on a LinkedIn post about my teaching methods, I noticed some wonky AI behavior that could easily be traced to the content I had fed the AI.

I had uploaded a collection of my own writing about structured prompting and asked Claude to help me craft a post about using these techniques in the classroom.

What I got back was technically accurate but strangely repetitive.

No matter what angle I asked for—student engagement, assignment design, or assessment strategies—Claude kept working in the same phrase: "Effective AI collaboration isn't just about better prompts—it's about designing the information environment around the task."

This sentence was definitely in my source material, and apparently Claude had decided it was the central insight worth emphasizing in every context.

While true, it wasn't particularly relevant to a post about helping students learn to write better research questions for AI. But the AI seemed convinced this was the key message, inserting variations of it regardless of what I actually wanted to communicate.

Then it hit me: the AI wasn't generating content based on my specific context. It was searching through the information I'd provided, finding patterns that seemed related to my request, and synthesizing whatever it deemed most important.

That repeated phrase wasn't creative emphasis—it was the AI treating my own writing like a “search database” and returning what it calculated as the most relevant result.

This realization completely reframed my understanding of what was happening during AI interactions. I wasn't getting repetitive responses because the AI lacked nuance.

I was getting “search results” from my own content, filtered through the AI's pattern recognition, without any guidance about what information was actually relevant to my specific goal.

This brings to mind one question: Who is going to give AI guidance about the massive loads of information we now throw at it (loads that are likely to keep growing)?

It's becoming clear that the people who understand information organization will determine how knowledge flows through our society.

While others debate whether AI will replace human intelligence or fuel student cheating, the real power lies in learning how to design information that guides AI toward better outcomes.

AI as Search Engine

For the past two years, most of us have been thinking about AI slightly wrong, especially those of us who work with content.

We've treated large language models as sophisticated text generators—digital authors that create content from some mysterious creative process or black box.

But it's way more interesting than that. These systems function as dense information ecosystems where knowledge exists in interconnected patterns throughout billions of neural pathways.

This understanding gets crucial support from recent analysis of how neural networks actually store and access information. As AI Substacker Devansh explains in his analysis of LLM architecture, "neurons are no longer neutral — each update risks overwriting existing, valuable information, leading to unintended consequences across the network."

Modern LLMs are "immense collections of interconnected neurons" where "most neurons are densely packed with critical insights."

This analysis illuminates why fine-tuning often fails to inject new knowledge effectively—it's not adding information to empty spaces, but overwriting existing patterns in what Devansh calls a "delicate ecosystem of an advanced model."

When we understand LLMs as information ecosystems rather than blank canvases, the search paradigm becomes a little more obvious.

When you prompt GPT-4 or Claude, you're not asking it to manufacture new text. You're requesting a search through this ecosystem, followed by synthesis of the relevant patterns it discovers.

The "generation" is actually sophisticated pattern matching and recombination based on the search parameters you provide through your prompt structure.

Instead of trying to prompt for pure creativity, we should focus on providing better search guidance. Instead of expecting AI to invent knowledge, we should help it navigate the information it already contains more precisely.

Consider this practical example from my own work. When I approach Claude with the prompt "Write about project management," I get generic, textbook-style responses because I'm essentially asking it to search randomly through management-related patterns.

But when I frame the same request as "Help me understand the core principles that make project management frameworks effective," I'm providing specific search parameters that help the AI locate information patterns related to principles, frameworks, and effectiveness.

The quality of AI responses depends heavily on how well we guide the search process through the information ecosystem. We're not programming the AI to think differently; we're helping it find what it already knows more efficiently.

If AI functions primarily as a search and synthesis tool, then our leverage comes from mastering information design rather than prompt engineering tricks. The most effective improvements come from better organizing and presenting information TO the AI, not from trying to modify the underlying model itself.

In fact, that’s what the best prompts do already.

Information Typing & Precision Content

My journey toward understanding information architecture began with frustration, if I’m honest.

Like many technical communicators, I wrestled with DITA (Darwin Information Typing Architecture) and similar structured authoring systems that often seemed to impose arbitrary constraints on natural writing processes.

I definitely could see the advantages of structured content, but found it difficult to apply in more fluid contexts like my own research, teaching, or even kinds of information beyond the strictly technical.

But AI gives these structures more meaning. Suddenly, those "arbitrary" information types provide navigational structure not just for humans, but also for machines.

My recent completion of Precision Content’s information typing course really helped me see this in a better light.

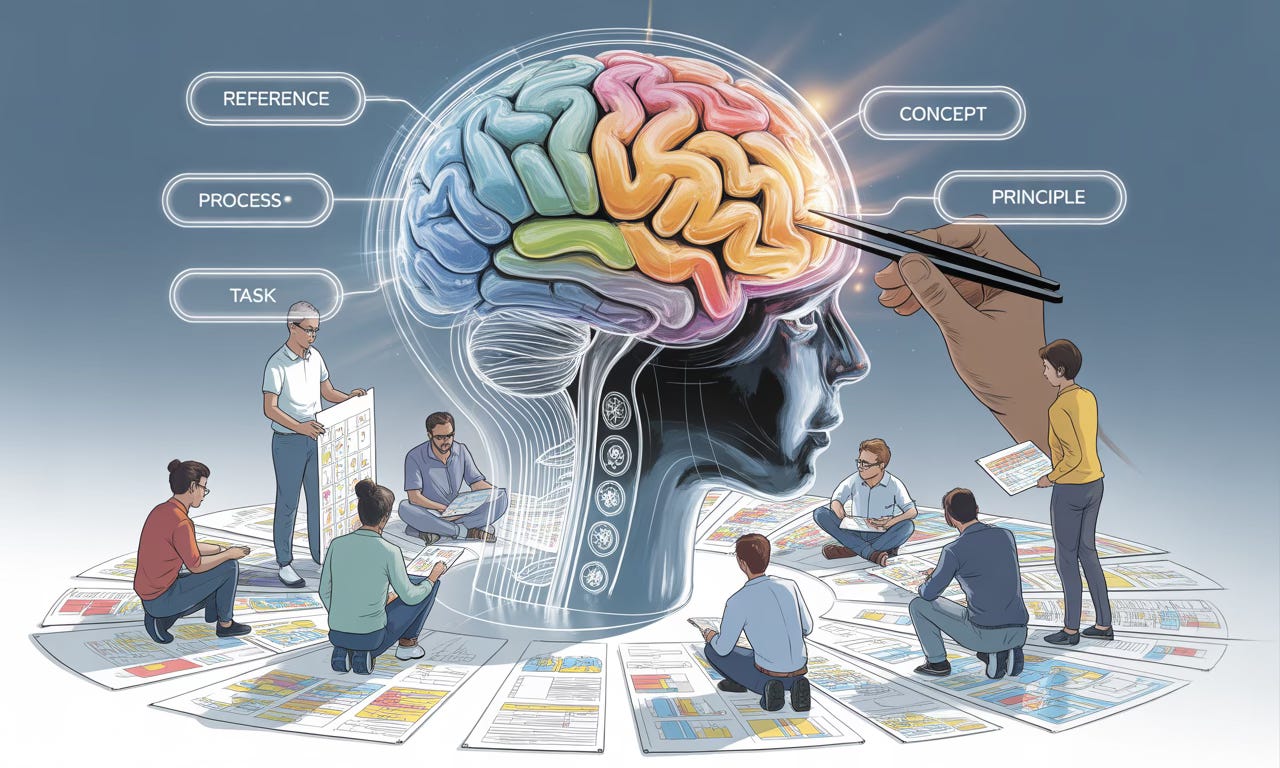

The course identifies five fundamental information types that appear across cultures and contexts.

Reference (what something is)

Concept (how to think about something)

Principle (why something works)

Process (how something unfolds), and

Task (steps to accomplish something).

These will probably look familiar if you know DITA, but they aren't just academic or DITA categories. They represent distinct patterns of how information functions in real communication, and they help us be precise about how AI uses information instead of experimenting arbitrarily.

Previously, I might have provided Claude with a messy document containing mixed information types and asked for help with "content strategy." The result would be generic advice drawing randomly from different parts of the source material.

Now, I separate the same information into labeled components.

Reference information about my audience demographics

Conceptual frameworks for content effectiveness

Principles of engagement psychology

Processes for content development

Specific tasks for implementation.

When I ask Claude to help with content strategy, it can search each information type appropriately, maintaining the functional integrity that makes each type useful.

The precision comes from working with how information naturally organizes itself, both in human cognition and in AI training data.

When we provide clear information design, we're essentially giving AI better instructions for navigating its own knowledge ecosystem or the knowledge ecosystem we give it.
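The labeled components described above can be pictured as a small script that tags each chunk of source material with its information type before it reaches the model. This is a minimal sketch under my own assumptions, not a Precision Content tool or a Claude feature: the `InfoType` enum, the `LabeledChunk` class, the `build_context` function, and the bracketed label format are hypothetical names chosen for illustration, and the sample content is placeholder text.

```python
from __future__ import annotations

from dataclasses import dataclass
from enum import Enum


class InfoType(Enum):
    """The five information types listed earlier (label text is illustrative)."""
    REFERENCE = "Reference"
    CONCEPT = "Concept"
    PRINCIPLE = "Principle"
    PROCESS = "Process"
    TASK = "Task"


@dataclass
class LabeledChunk:
    """One piece of source material, tagged with its information type."""
    info_type: InfoType
    title: str
    body: str


def build_context(chunks: list[LabeledChunk]) -> str:
    """Group chunks by information type and render one labeled text block."""
    sections = []
    for info_type in InfoType:  # fixed enum order keeps the output predictable
        matching = [c for c in chunks if c.info_type is info_type]
        if not matching:
            continue  # skip types with no material rather than emit empty labels
        lines = [f"[{info_type.value.upper()}]"]
        lines.extend(f"{c.title}: {c.body}" for c in matching)
        sections.append("\n".join(lines))
    return "\n\n".join(sections)


# Placeholder content, invented purely for the example.
chunks = [
    LabeledChunk(InfoType.TASK, "Publish", "Placeholder steps for publishing a post."),
    LabeledChunk(InfoType.REFERENCE, "Audience", "Placeholder audience demographics."),
    LabeledChunk(InfoType.PRINCIPLE, "Engagement", "Placeholder engagement principle."),
]

context = build_context(chunks)
print(context)
```

The resulting text would be pasted or uploaded ahead of the actual request. The design choice worth noticing is that the grouping happens before the AI sees anything, so each type arrives as a distinct, labeled block instead of a mixed document.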

The Puzzle Paradigm

In this light, the conversation metaphor that dominates AI interaction design fundamentally misses how high-value AI work actually happens.

Conversations are fluid, improvisational, and forgiving of ambiguity. This is great for exploration, but when AI work matters—when you need reliable, reusable, and scalable results—you need systematic thinking.

I've started approaching AI collaboration more like solving complex puzzles. This metaphor captures something essential about information work that the conversation model obscures. Success depends on:

understanding the overall goal

identifying the pieces you have to work with

recognizing relationships between pieces, and

organizing everything systematically before expecting meaningful results.

Many people and organizations remain stuck in the "dump the puzzle box and hope" phase. They throw unstructured information at AI tools and iterate through conversations until something useful emerges.

This approach can work for simple questions or one-off tasks, but it breaks down quickly for complex, repeated, or high-stakes applications.

The puzzle approach requires different thinking. Before engaging with AI, I:

map out what I'm trying to accomplish (the completed picture),

inventory the information and resources I have available (the individual pieces),

analyze how these elements relate to each other (edge patterns and groupings), and

organize everything into logical categories (sorting pieces by color, pattern, or function).

This systematic preparation transforms how AI responds to my requests. Instead of searching randomly through its training data, the AI can locate relevant patterns more precisely and maintain coherence across complex tasks.
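The four preparation steps above could be treated as a pre-flight check that runs before any prompt is written. The sketch below is only one hypothetical way to do that: `PuzzleBrief`, its fields, and the `gaps` method are names I made up for illustration, and the sample goal and piece names are placeholders.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class PuzzleBrief:
    goal: str = ""                                                   # the completed picture
    pieces: dict[str, str] = field(default_factory=dict)             # piece name -> category
    relations: list[tuple[str, str]] = field(default_factory=list)   # pairs of related pieces

    def gaps(self) -> list[str]:
        """Return the preparation steps that are still incomplete."""
        problems = []
        if not self.goal.strip():
            problems.append("no goal stated")
        if not self.pieces:
            problems.append("no pieces inventoried")
        for pair in self.relations:
            for name in pair:
                if name not in self.pieces:
                    problems.append(f"relation mentions unknown piece: {name}")
        for name, category in self.pieces.items():
            if not category.strip():
                problems.append(f"piece has no category: {name}")
        return problems


# Placeholder brief, invented for the example.
brief = PuzzleBrief(
    goal="Draft a unit plan",
    pieces={"syllabus": "reference", "rubric": ""},
    relations=[("syllabus", "rubric"), ("rubric", "exemplars")],
)

for problem in brief.gaps():
    print(problem)
```

Here the check flags a relation that points at a piece never inventoried and a piece that has not been sorted into a category, which are the kinds of gaps that otherwise surface halfway through a conversation.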

I’ve mostly been using Claude these days, which can get frustrating at times. The data and conversation limits are much smaller than those of ChatGPT or Gemini. But I still find it works better with my custom content.

I can't simply upload massive folders of unorganized documents, so I'm forced to curate, structure, and label my information thoughtfully. The constraint becomes a forcing function for better information design.

Time and again, I've seen small, well-structured knowledge bases outperform large collections of unorganized documents.

The systematic organization allows AI to locate relevant information efficiently and maintain functional relationships between different pieces of knowledge.

The Power of Information Design

What I've discovered through thousands of AI interactions is that structured thinking gets amplified in AI responses. When information is clearly organized and appropriately labeled, AI systems can preserve and extend its functional characteristics more effectively than when working with unstructured materials.

This creates significant advantages for anyone working with complex information, whether you're conducting research, teaching students, or developing technical documentation.

The phenomenon extends beyond individual productivity gains. Organizations that develop systematic approaches to knowledge management position themselves to leverage AI capabilities more effectively than competitors who treat AI as a magic black box.

It also allows companies to deliver greater accuracy, better personalization, and reduced bias.

This also has implications for education and research.

Instead of treating AI literacy as primarily a technical skill, we should focus on developing information design thinking. Students who learn to analyze, organize, and structure information effectively will have enormous advantages in AI-augmented fields.

Academic institutions that recognize this shift early can help their students develop these essential capabilities across disciplines.

The most valuable graduates won't be those who can write the most clever prompts, but those who can organize information effectively for both human understanding and AI collaboration.

This represents a fundamental literacy—as important as critical thinking or statistical reasoning—that transcends specific technologies or career paths.

Practical Implementation

For individuals looking to restructure their AI workflow, the path forward centers on developing information design habits.

Start by auditing your current information practices. How do you organize documents, notes, and knowledge resources? Are you labeling information by function and purpose, or simply storing everything chronologically or by topic?

Begin experimenting with information typing in your AI interactions. Instead of providing AI with raw, unstructured materials, spend time identifying whether your information represents concepts, principles, processes, tasks, or reference data (or other categories that might be meaningful to your work). Label these explicitly when working with AI systems.

Develop modular thinking about your knowledge work. Rather than approaching each AI interaction as an isolated conversation, consider how the information architecture you create for one task might serve multiple future applications. The effort invested in structuring information pays dividends across repeated use.
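To make the reuse idea concrete, here is a rough sketch in which one typed knowledge base feeds several recurring jobs. Everything in it is an assumption for illustration: the `KNOWLEDGE_BASE` and `TASK_PROFILES` dictionaries, the job names, the `context_for` function, and the placeholder notes are all hypothetical.

```python
# Hypothetical knowledge base: information type -> notes (placeholder text).
KNOWLEDGE_BASE = {
    "reference": ["Placeholder audience facts."],
    "concept": ["Placeholder framework."],
    "principle": ["Placeholder principle."],
    "process": ["Placeholder workflow."],
    "task": ["Placeholder step list."],
}

# Hypothetical profiles: which information types each recurring job draws on.
TASK_PROFILES = {
    "plan_content": ["reference", "concept", "principle"],
    "write_checklist": ["process", "task"],
}


def context_for(job: str) -> str:
    """Assemble only the information types a given job needs, in profile order."""
    parts = []
    for info_type in TASK_PROFILES[job]:
        for note in KNOWLEDGE_BASE[info_type]:
            parts.append(f"{info_type.upper()}: {note}")
    return "\n".join(parts)


print(context_for("write_checklist"))
```

The structuring work is done once in the knowledge base, and each new job only needs a short profile saying which types it should see.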

Start with pilot projects that demonstrate the value of structured approaches. Choose a specific domain where your organization regularly engages with AI—customer service, content creation, technical documentation—and invest in proper information design for that area. The performance improvements will make the case for broader implementation.

Right now, I’m experimenting with structuring information about my study abroad trip to Poland so that it can serve as a guide for leaders and students, and potentially feed an AI assistant as well.

More on that to come soon.

Educational institutions should begin integrating information design thinking into AI literacy curricula. Students need to understand not just how to use AI tools, but how to organize and present information in ways that guide AI toward better outcomes. This requires connecting information science principles with practical AI applications.

The frameworks and tools for this work already exist. Information design principles from library science, technical communication, and knowledge management provide solid foundations.

The missing piece is recognizing these as essential AI collaboration skills rather than just specialized technical knowledge.

The Future Belongs to Information Designers

We're witnessing the emergence of information design as an essential 21st-century literacy. Just as the printing press elevated the importance of writing skills and the internet made digital literacy crucial, AI systems are making information design a core competency for professional success.

We're not just users of AI technology; we're designers of information ecosystems that determine what AI can accomplish.

The opportunity is enormous for those who recognize this shift while others continue treating AI as magical technology. The organizations, educators, and individuals who invest in systematic thinking about information will have substantial advantages over competitors who rely on trial-and-error approaches to AI collaboration.

The future of knowledge belongs to those who understand that the real power lies not in the AI systems themselves, but in how we design the information that guides them.

The conversation about AI often focuses on what machines can do to us or for us. But the more important question is what we can do with machines when we understand how to structure information effectively.

The answer is: we can build knowledge systems that preserve human wisdom while leveraging artificial capabilities at larger scale.

As AI capabilities continue expanding, the value of good information design will only increase. Those who develop these skills now will shape how AI is developed and deployed in the future. Those who ignore information design will find themselves dependent on systems designed by others, with little agency over the outcomes.

There is little research on these kinds of integrations, and much of what is happening in the professional world is happening behind closed doors.

In fact, many of the results I see personally in my AI interactions are untested.

So this summer I will be working on collecting some of these design approaches for AI information that go beyond just prompting, developing testing methodologies, and demonstrating how good content can make AI work.

This also begins my journey into knowledge graphs, which I consider the pinnacle of information design.

My goal is to share my experience and research with non-experts and educators in ways that can be more broadly applicable … not just in the realm of technology or technical writing.

I hope you'll come along for the ride.

Aha! But what IS information? From what I can see, there are as many definitions of information as there are disciplines. When you tell me to design my information, what are you really telling me?

This was useful food for thought - thanks.